Using the Python Keras multi_gpu_model with LSTM / GRU to predict Timeseries data - Data Science Stack Exchange

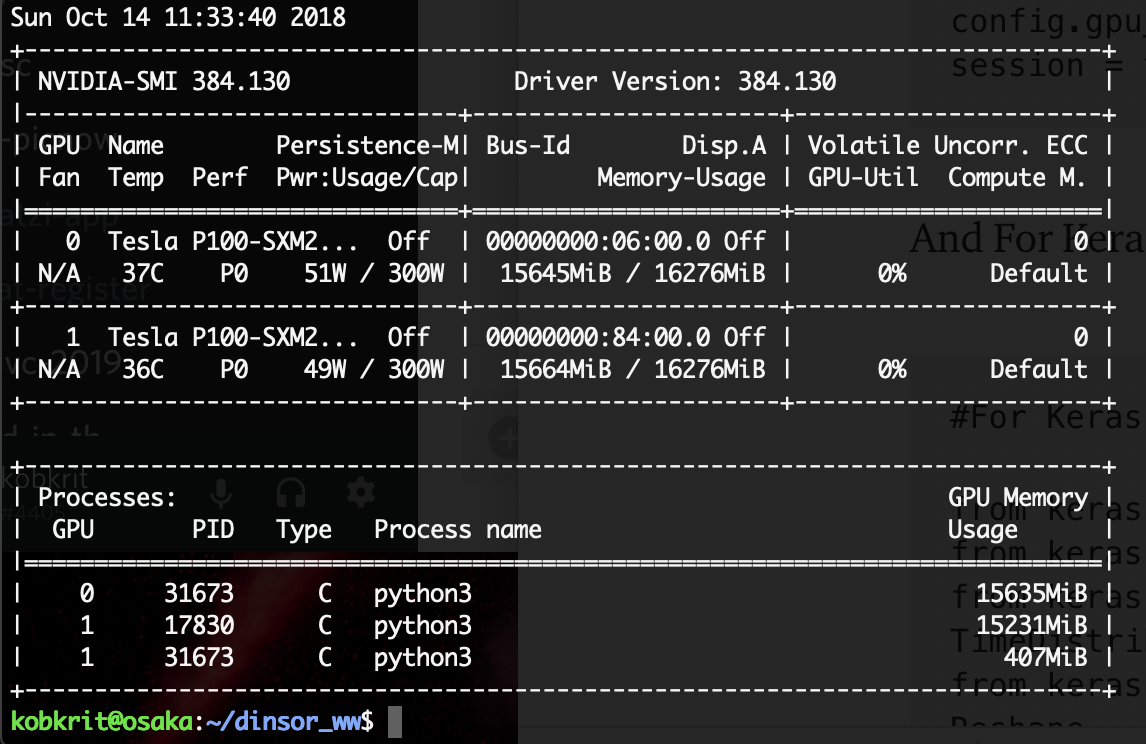

What are current version compatibility between keras-gpu, tensorflow, cudatoolkit, and cuDNN in windows 10? - Stack Overflow

Interaction of Tensorflow and Keras with GPU, with the help of CUDA and... | Download Scientific Diagram